From Coaching to Combine – A Virtual Combine Training Experience

Through a collective COVID-induced pivot in the health & fitness industry, online coaching has boomed over the past year. Whether it was simple exercise prescription or Zoom workouts, we found a new (temporary?) norm. Even with gyms reopening, many online coaching businesses are still thriving. While online coaching offers much greater accessibility, it also carries the perception of providing less value than in-person coaching. That perception may sometimes be accurate, but online coaching can still be extremely effective. The difficulty lies in replicating an in-person experience over the internet. Two questions coaches must ask themselves when trying to succeed at coaching athletes online are:

1. To what extent can we provide meaningful, high-quality coaching without being physically present?

2. What valid and reliable methods can we implement and track online to provide a high-quality experience worth paying for?

The real challenge is filling the gaps left by the absence of an in-person experience. Coaching high-level athletes presents greater challenges, as it tends to require more specificity and a much closer coaching eye to provide meaningful feedback. High-level athletes may also require greater programming flexibility and regulation: being highly trained, with a very efficient neuromuscular system, makes them more sensitive to volume and fatigue.

After taking on some new athlete clientele, I recently found myself having to answer these questions, along with one more very important one:

When an athlete is preparing for the most important interview of their entire athletic career, why would they opt for an online coach?

This past winter I had the opportunity to coach two U SPORTS football players in their preparation for the CFL combine. Luckily, because I was only an hour away from their school (Acadia University), we were able to perform an initial assessment in person. Unfortunately, travel restrictions and time constraints made training together consistently difficult, so a purely online approach became necessary. Given the nature of the preparation, the initial assessment primarily involved collecting baseline measurements for all combine-specific tests.

Now, this wasn’t my first experience programming and coaching combine prep. It was, however, my first time delivering it online. My typical approach to combine programming involves identifying specific aspects of the athlete’s athleticism, as well as their general strengths and weaknesses. Combined with an understanding of positional demands, this information helps determine exactly how I should approach their training.

Needs Analysis for Athlete and Sport

This process should begin by considering the athlete’s strengths, weaknesses, and general athletic traits. These could be anything you consider valuable in shaping your programming as it pertains to the neuromuscular and tissue qualities of the athlete. For me, I find it valuable to identify:

A. Elasticity (Reactive Strength Index): RSI is a measure of an athlete’s reactive jump ability and is calculated by dividing jump height by ground contact time in a drop jump. Not only is this metric a great indicator of an athlete’s ability to create muscular stiffness and use their tendons efficiently, it is also a useful indicator of recovery. Because RSI reflects the efficiency of the stretch-shortening cycle (SSC), which is heavily governed by the CNS, it has been recognized as a valuable readiness monitoring tool. Research on elite handball players by Ronglan et al. (2006) identified an 8% decrease in RSI as indicative of considerable decreases in performance.
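As a rough illustration, the RSI calculation and the percent change from a baseline can be sketched in a few lines. This is a minimal sketch, assuming jump height in metres and contact time in seconds; jump mats and apps report different units, so only compare values measured the same way. The function names are hypothetical.

```python
def reactive_strength_index(jump_height_m: float, contact_time_s: float) -> float:
    """Drop-jump RSI: jump height divided by ground contact time."""
    if contact_time_s <= 0:
        raise ValueError("contact time must be positive")
    return jump_height_m / contact_time_s


def percent_change(current: float, baseline: float) -> float:
    """Percent change from a baseline value (negative means a decrease)."""
    return (current - baseline) / baseline * 100


# Hypothetical values (assumed units: metres and seconds): a 0.40 m jump with a
# 0.20 s contact gives an RSI of 2.0, and a later RSI of 1.84 is an 8% decrease.
baseline_rsi = reactive_strength_index(0.40, 0.20)
drop = percent_change(1.84, baseline_rsi)
```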

B. Eccentric Utilization Ratio (EUR): EUR is the ratio of countermovement jump (CMJ) to static jump (SJ) performance and is often used to isolate the contribution of the stretch-shortening cycle (SSC). While both jumps are valid and reliable indicators of power output (Markovic et al., 2004), EUR can be used to better understand the individual’s SSC efficiency and their optimal movement and force development strategies.
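A minimal sketch of the ratio, assuming EUR is computed from jump heights (other outputs, such as peak power, are sometimes used instead). The function name and example heights are hypothetical.

```python
def eccentric_utilization_ratio(cmj_height: float, sj_height: float) -> float:
    """EUR: countermovement jump height divided by static (squat) jump height."""
    if sj_height <= 0:
        raise ValueError("static jump height must be positive")
    return cmj_height / sj_height


# Hypothetical heights in metres: the countermovement adds 5 cm to the jump,
# giving a ratio of about 1.1. A value above 1.0 means the CMJ was higher than the SJ.
eur = eccentric_utilization_ratio(0.55, 0.50)
```

Both jumps must be measured with the same device and units for the ratio to be meaningful.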

These metrics are easy to obtain, identify areas in need of development, and provide a great deal of information on how the athlete will likely respond to different exercises and contraction types. They also have strong implications for most speed and power tests (e.g., broad jump, vertical jump, 40-yard dash). At the time of assessment, a velocity-based training device (PUSH) was used to measure and calculate these values.

C. Test Performance – Technical Inefficiencies & Position Specific Considerations

Technical Inefficiencies: While quantitative measures can provide objective feedback and a more complete picture of a given test, this component is largely qualitative. When you know what questions are going to be on the test, you study those questions. Each combine test has different technical requirements, and each should be carefully practiced to maximize results. In the pro agility, is the athlete turning their hips early enough into the transition? Where do they plant their front and back foot? Do they maintain an aggressive shin angle during the plant? How many steps do they take from the first to the second transition, and what speed should they attempt to reach to maximize transition efficiency? All of these should be considered when breaking down and practicing the pro agility. Improving physical outputs alone isn’t enough to truly maximize test performance.

Position Specific Considerations: While the aim should always be to perform well on all tests, the reality is that some tests carry more weight than others depending on the athlete’s position. Specific tests have been shown to predict higher draft status (McGee & Burkett, 2003; Hartman, 2011), as well as success at the professional level (Sierer et al., 2008). A recent study by Cook et al. (2020) analyzed the relationship between NFL combine results and game performance over a 5-year period. The tests most indicative of performance for each position were:

Defensive Back: 40-yard dash, vertical jump

Defensive Line: pro agility

Linebacker: 40-yard dash, pro agility

Offensive Line: pro agility

Running Back: broad jump

Tight End: 3-cone

Wide Receiver: 40-yard dash

Quarterback: no significant indicators

While this study found no correlation between bench press performance and game performance, Sierer and colleagues did find that linemen with better 225-lb bench press performance tended to be drafted higher than their weaker competitors. Knowing that specific tests carry more weight allows a coach to prioritize them.
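One way to make that prioritization explicit is to encode the position-to-test findings listed above as a lookup and order an athlete’s practice list accordingly. This is a hypothetical sketch: the position abbreviations, the `ALL_TESTS` list, and the ordering rule are illustrative assumptions, not part of Cook et al. (2020).

```python
from typing import Dict, List

# Tests most indicative of game performance by position, per the list above.
# Quarterbacks had no significant indicators.
PRIORITY_TESTS: Dict[str, List[str]] = {
    "DB": ["40-yard dash", "vertical jump"],
    "DL": ["pro agility"],
    "LB": ["40-yard dash", "pro agility"],
    "OL": ["pro agility"],
    "RB": ["broad jump"],
    "TE": ["3-cone"],
    "WR": ["40-yard dash"],
    "QB": [],
}

# Assumed set of tests an athlete practices; still train all of them.
ALL_TESTS: List[str] = [
    "40-yard dash", "vertical jump", "broad jump", "pro agility", "3-cone", "bench press",
]


def ordered_tests(position: str) -> List[str]:
    """All combine tests, with the position's priority tests listed first."""
    priority = PRIORITY_TESTS.get(position, [])
    return priority + [test for test in ALL_TESTS if test not in priority]
```

The point of the ordering is emphasis, not exclusion: every test stays on the list.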

D. Positional Key Performance Indicators (KPIs)

While combine tests are valid and reliable indicators of important athletic traits, training for these tests specifically will not fully prepare an athlete to fulfill their role on the field, because the tests do not evaluate position-specific skills. For that reason, the CFL also incorporates positional drills and one-on-ones, which give scouts a full picture of how the athlete actually performs at their position. Position-specific demands therefore still need to be considered during combine preparation.

Performance Testing & Fatigue Monitoring

Initial testing gave us the previously discussed metrics. The difficult part would be tracking performance consistently to understand how the athletes were responding to training. Luckily, because they trained out of the Acadia High Performance centre, they had access to technology that let us gather regular readiness and performance data to inform training. If you choose to incorporate monitoring technology into the remote coaching process, make sure the athlete first understands how to use it so that reliable data can be obtained (since they will be the one recording and relaying this information).

Several quantitative and qualitative measures were taken to monitor fatigue and prescribe appropriate loads, so that I could understand how the athletes were responding to training. These were:

Subjective Wellness Questionnaire: Sleep quality, mood, energy, stress, and soreness were each rated on a 1-5 scale through the TrainHeroic training app, giving insight into how the athlete was feeling that day. Scores were recorded before the warm-up and communicated via text on days when they were below 3.0 and fatigue was noticeable.

In retrospect, the questionnaire helped us understand how the athlete performed objectively despite changes in mood, poor sleep, general stress, and so on (low wellness scores didn’t always mean poor objective scores). Objective data had more influence on day-to-day regulation of volume and intensity. Poor wellness scores were communicated to the coach before the session, at which point modifications could be planned if poor objective measures were also obtained.
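The questionnaire logic described above (text the coach when scores fall below 3.0, but only plan modifications when objective markers are also poor) can be sketched as follows. It assumes the 3.0 threshold applies to the average of the five items and that every item is scored so that higher means better; both are assumptions, since the text does not say how the items were combined or oriented. The function names are hypothetical.

```python
from statistics import mean

# The five questionnaire items, each rated on a 1-5 scale.
# Assumption: every item is oriented so that a higher score means feeling better.
WELLNESS_ITEMS = ("sleep", "mood", "energy", "stress", "soreness")


def wellness_score(responses: dict) -> float:
    """Average of the five 1-5 wellness ratings."""
    for item in WELLNESS_ITEMS:
        value = responses[item]
        if not 1 <= value <= 5:
            raise ValueError(f"{item} must be rated 1-5, got {value}")
    return mean(responses[item] for item in WELLNESS_ITEMS)


def notify_coach(responses: dict, threshold: float = 3.0) -> bool:
    """Low wellness: text the coach before the session."""
    return wellness_score(responses) < threshold


def plan_modification(responses: dict, objective_markers_poor: bool,
                      threshold: float = 3.0) -> bool:
    """Only consider changing the session if objective data (e.g., RSI) is also poor."""
    return notify_coach(responses, threshold) and objective_markers_poor
```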

Jump Monitoring: Both RSI and vertical jump height (with arm swing and with arms fixed) were used to monitor readiness and performance. RSI was weighted more heavily for fatigue, while jump height tracked overall power output. Jump metrics were collected with a jump mat (Perform Better Just Jump System); a less expensive option is the MyJump app, which costs around $15. Jumps were performed after the warm-up, and the results were texted to the coach before speed work began.

If RSI dropped by more than 10% (relative to a 3-day moving average), modifications were made at the coach’s discretion. Changes depended on the focus of training that day, what was planned for the following days, and any notable soreness reported by the athlete. High-force and especially tendon-heavy exercises were the first to be modified, as they are highly demanding on both tissues (muscle and tendon) and on the CNS. On upper body days, our plan was to remove or reduce tempo repetition volume (typically performed at the end of the session). On lower body days, we would reduce sprint volume (either reps or distance) or the repetition volume of the main lifts, depending on the extent of fatigue.
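A minimal sketch of that trigger, assuming the “3-day moving average” is the mean of the three most recent readings before today (the text does not specify whether today’s value is included). The function names are hypothetical, and the flag only prompts a review; what changes is still the coach’s call.

```python
from statistics import mean
from typing import List, Optional


def rsi_change_vs_moving_average(history: List[float], today: float,
                                 window: int = 3) -> Optional[float]:
    """Percent change of today's RSI relative to the mean of the last `window` readings."""
    if len(history) < window:
        return None  # not enough readings yet to form a moving average
    baseline = mean(history[-window:])
    return (today - baseline) / baseline * 100


def flag_for_review(history: List[float], today: float,
                    threshold_pct: float = 10.0, window: int = 3) -> bool:
    """True when RSI has dropped by more than `threshold_pct` percent."""
    change = rsi_change_vs_moving_average(history, today, window)
    return change is not None and change < -threshold_pct
```

For example, with recent readings of 2.0, 2.1, and 1.9, a reading of 1.75 sits about 12.5% below the average and is flagged, while 1.85 (about 7.5% below) is not.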

Rate of Perceived Exertion (RPE): RPE was one method used to select loads. Because I was working with high-level athletes, I felt comfortable giving them the authority to judge the intensity of each set and their proximity to failure. RPE allowed the athletes to account for subjective readiness and day-to-day strength when performing their main strength and power exercises.

RPE was applied most strategically to the main lifts (most accessory exercises were performed to RPE 8-9). Athletes were expected to perform each set of their main lifts at a specific RPE, which inherently regulates load and total volume. Warm-up sets helped the athlete gauge how strong they were feeling that day and how much load they would need for their first set. In earlier phases, when the goal was to accumulate more volume, the athlete performed a top set at a specific RPE, then removed 10% of the load and performed a predetermined number of back-off sets with an RPE cut-off in place. If the athlete reached the same RPE as their top set during the back-off sets, the exercise was finished for that day. RPE cut-offs were used to identify poor between-set recovery and fatigue. In later phases, back-off sets were removed, and fewer sets were performed at a higher average RPE (i.e., working up to a top set) rather than accumulating volume.
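The top-set and back-off structure can be sketched as two small functions. This is a hypothetical sketch: the 2.5 rounding increment (kg or lb, whatever the plates allow) and the function names are assumptions; the 10% reduction and the rule of stopping once a back-off set reaches the top-set RPE come from the description above.

```python
from typing import List


def backoff_load(top_set_load: float, reduction: float = 0.10,
                 increment: float = 2.5) -> float:
    """Top-set load minus 10%, rounded to the nearest loadable increment (an assumption)."""
    return round(top_set_load * (1 - reduction) / increment) * increment


def backoff_sets_completed(top_set_rpe: float, backoff_rpes: List[float],
                           planned_sets: int) -> int:
    """Count back-off sets performed before the RPE cut-off ends the exercise.

    `backoff_rpes` holds the RPE the athlete reports after each back-off set,
    in order. A set that reaches the top-set RPE is counted, and then the
    exercise is finished for the day.
    """
    completed = 0
    for rpe in backoff_rpes[:planned_sets]:
        completed += 1
        if rpe >= top_set_rpe:
            break  # RPE cut-off reached
    return completed
```

For example, after a top set of 150 at RPE 8, back-offs would be loaded at 135; if the third back-off set is rated RPE 8, the exercise ends there even if a fourth set was planned.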

Velocity Based Training (VBT): When targeting velocity-specific strength, a GymAware device was used to regulate load based on bar velocity. This allowed for more specific velocity-based work as well as objective load regulation and stress management.

On these speed- and power-focused days, athletes were prescribed a specific velocity range to stay within, depending on the focus of the day and the phase. If athletes were fatigued, they had to lower the load (relative to their average) to reach the prescribed velocity. In earlier phases, prescribed velocities were lower, and athletes reduced weight when necessary to stay in the prescribed velocity zone while completing all of the volume. In later phases, higher velocities were prescribed, but load was progressed linearly week to week. When velocity cut-offs were reached, the set was stopped early.
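A minimal sketch of the two velocity rules described above: adjusting load when the first rep falls outside the prescribed zone, and ending a set once rep velocity drops below the cut-off. The zone bounds and cut-off values are hypothetical placeholders, and the code assumes one velocity value per rep in m/s rather than any specific GymAware export format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VelocityZone:
    low: float   # slowest acceptable rep velocity, m/s
    high: float  # fastest acceptable rep velocity, m/s


def load_direction(first_rep_velocity: float, zone: VelocityZone) -> str:
    """Suggest which way to move the load so reps land inside the zone."""
    if first_rep_velocity < zone.low:
        return "decrease"  # too slow, e.g. a fatigued athlete
    if first_rep_velocity > zone.high:
        return "increase"  # too fast for the intended stimulus
    return "keep"


def reps_before_cutoff(rep_velocities: List[float], cutoff: float) -> int:
    """Number of reps completed before the first rep slower than the cut-off.

    The set stops at that slow rep; whether it counts toward the set is a
    coaching choice, and here it is not counted.
    """
    count = 0
    for velocity in rep_velocities:
        if velocity < cutoff:
            break  # velocity cut-off reached: stop the set early
        count += 1
    return count
```

With a hypothetical cut-off of 0.80 m/s, reps at 0.92, 0.90, 0.86, and then 0.79 m/s would end the set after three reps.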

Video Feedback: Athletes also recorded videos of specific exercises and tests, which allowed me to provide more specific feedback. This became particularly important once test-specific practice found its way into the mix more frequently. Exercises were recorded at the beginning of each phase to ensure proper technique, and combine tests were filmed from specific views to allow technical feedback.

Shuttles were recorded with the athlete facing the camera in their start position so the full distance could be easily observed. The broad jump was recorded from the side. The vertical jump was recorded from the back or side: the side view to analyze loading strategy, the back view to analyze reach. The 3-cone was recorded at a 45-degree angle from the farthest cone, looking toward the start, to get a better view of movement around the second and third cones. Sprints were recorded from the side to observe positions (torso angle, shin angle, arm swing, foot placement), with occasional back views to observe hip rotation. The bench press was recorded from the front of the bench to view elbow position and lockout. Feedback was provided via video call with the athlete after the session and implemented the next time the test or exercise was performed.

Mesocycle Summary

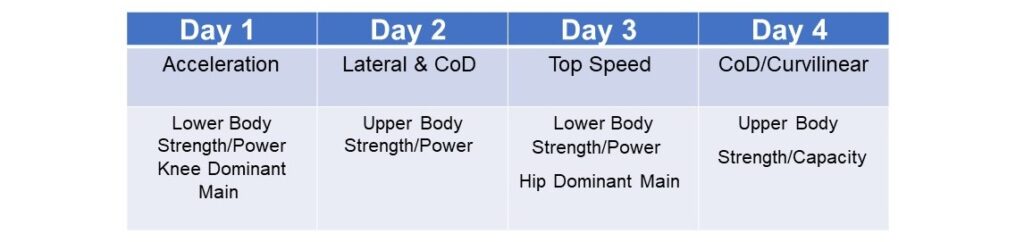

Combine prep started on Monday, December 21st, which gave us almost 14 weeks until combine day. Athletes performed four training sessions per week, split into upper body and lower body days. The intention was to replicate a high-low training model as closely as possible, with the understanding that upper body days revolved heavily around bench press preparation (and bench work required more intensity). The first bench day focused primarily on strength and power, while the second allowed for greater volume accumulation.

Acceleration and top speed were trained primarily on lower body days, while change of direction and lateral work were included on upper body days. Plyometric work was kept extensive in nature until the last 4-6 weeks of prep, with greater volumes of extensive (vs. intensive) work performed on upper body days. Most of the change of direction and lateral speed work also transitioned to test-specific practice in the last 6 weeks of prep. Football-specific skill work was performed separately on a fifth day, with the volume of these sessions increasing as the combine approached.

Training consisted of three 4-week “phases”, followed by a progressive 2-week taper leading into testing.
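The block calendar can be laid out with simple date arithmetic. This is a minimal sketch, assuming the dates refer to December 2020 through March 2021 (December 21, 2020 was a Monday, matching the text) and that each phase and the taper run back to back with no extra rest weeks; the function name is hypothetical.

```python
from datetime import date, timedelta
from typing import List, Sequence, Tuple


def build_schedule(start: date, phase_weeks: Sequence[int] = (4, 4, 4),
                   taper_weeks: int = 2) -> List[Tuple[str, date, date]]:
    """Return (block name, first day, last day) for each training block."""
    blocks = []
    cursor = start
    for number, weeks in enumerate(phase_weeks, start=1):
        last_day = cursor + timedelta(weeks=weeks) - timedelta(days=1)
        blocks.append((f"Phase {number}", cursor, last_day))
        cursor = last_day + timedelta(days=1)
    taper_end = cursor + timedelta(weeks=taper_weeks) - timedelta(days=1)
    blocks.append(("Taper", cursor, taper_end))
    return blocks


schedule = build_schedule(date(2020, 12, 21))
for name, first_day, last_day in schedule:
    print(f"{name}: {first_day.isoformat()} to {last_day.isoformat()}")
```

Under these assumptions, the taper runs from March 15 to Sunday, March 28, 2021, the planned testing day.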

Sample Week

The “Virtual” Combine

To spice things up, on January 22nd the CFL announced that the combine would be performed “virtually”. What did this mean? All tests had to be performed and recorded on a football-lined field under specific conditions set by the CFL, to ensure validity and consistency between athletes. While jumps were measured at the recording site, all times were determined by the CFL from the submitted video footage. Official times ran from the athlete’s first movement to crossing the finish line, as far as officials could observe. Tests were recorded from the following angles:

Shuttle: Straight on, athlete facing camera

3-Cone: Side view from start (left side of athlete)

40-yard Sprint: 45-degree angle (left)

Broad Jump: Side (right)

Bench Press: Front

*Vertical Jump: Back

*The vertical jump ended up being excluded from regional testing, but it was performed anyway to provide extra video material to send to coaches.

One advantage this ended up providing was a huge amount of flexibility around testing itself. Athletes had to submit their results by a deadline 2 weeks after the intended combine date. This offered a couple of advantages: first, athletes could train for up to 2 additional weeks before testing; second, athletes could retest after subpar results (though this likely wasn’t the CFL’s intention). To avoid making too many adjustments to our initial plan, we ultimately kept our testing date of March 28th as planned. All testing was performed and recorded by Acadia Football staff.

One disadvantage of the virtual combine was the requirement to test outside on the Acadia turf. In Canada, it seemed like a strange decision by the CFL to have athletes test outside at a time of year when it’s cold and windy. Conditions weren’t ideal on testing day, but the athletes felt that switching dates at the last minute would cause additional anxiety, and it wouldn’t have lined up with our ideal “peaking” dates. We had a backup day planned in case of rain or snow.

Reflection & Lessons Learned

The athletes were very successful at the virtual combine, both ranking near the top of their position in the regional group (and holding some of the top performances in certain tests). Looking back at jump height differences, Week 10 seemed to be the most stressful week. This makes sense, as we were finishing our second training phase and coming off a high-stress week while also beginning a highly neurologically demanding phase. The highest vertical jumps were observed during the final week of the taper, exactly as intended with testing that weekend. Day 3 most consistently produced higher average vertical jumps and was typically the day personal bests were hit; we suspect the additional rest following the upper body day allowed for greater readiness. Observing these trends led us to schedule combine testing on Sunday, as we suspected athlete readiness would be higher.
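Trends like the Day 3 pattern are easier to see when the daily jump log is summarized by training day. A minimal sketch, assuming each log entry is a (training day number, jump height) pair; the function names are hypothetical, and with only a few weeks of data a difference between day averages may still be noise.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple


def mean_jump_by_day(records: Iterable[Tuple[int, float]]) -> Dict[int, float]:
    """Average jump height for each training day of the week (assumed numbered 1-4)."""
    heights_by_day = defaultdict(list)
    for day, height in records:
        heights_by_day[day].append(height)
    return {day: mean(heights) for day, heights in sorted(heights_by_day.items())}


def highest_readiness_day(records: Iterable[Tuple[int, float]]) -> int:
    """Training day with the highest average jump height."""
    averages = mean_jump_by_day(records)
    return max(averages, key=averages.get)
```

Feeding in the full log from a training block, rather than a single week, gives a more trustworthy comparison between days.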

While I find daily testing useful for monitoring and for getting athletes used to the “stress” of testing, it’s important that your athletes understand that daily fluctuations in performance will occur. Just like bodyweight on a scale, don’t let them get too worked up over changes in either direction. This happened in a couple of instances where vertical jumps were down and one athlete showed noticeable stress, only to hit a personal best two days later. Similarly, athletes would come in on certain days feeling sluggish but still achieve good jump scores. For this reason, I always collected objective data before making any changes to training. While jump mats aren’t accessible to everyone, cheaper alternatives are available.

Giving feedback on the technical components of the tests had its challenges, especially with no objective reference such as timing (often the case when athletes trained without a partner). I would receive shuttle or sprint videos after speed work was completed and prepare feedback to present via video call later that day, explaining each technical flaw and demonstrating over FaceTime if needed. The downside was not being able to give live feedback during the session itself, so corrections couldn’t be made on the spot. Luckily, the athletes had access to a speed coach who worked with them several times leading up to combine day to refine their sprint technique.

The virtual combine clearly came with pros and cons, and this year’s results will always be difficult to compare with previous years. There is always the possibility of cheating when officials aren’t watching carefully. In the 40-yard dash, for example, an athlete could start farther forward, since the camera is much more distant than for other tests. Recording and submitting multiple angles may be a useful way to prevent cheating on these tests. Still, the longer we live in a restricted society, the more adaptable we need to be in creating a valid testing experience.

Online coaching clearly poses many difficulties and potential shortcomings compared to an in-person coaching experience. However, with more innovation in the sport-tech space and the development of cheaper mobile-based testing and monitoring tools, sport-science-backed online coaching is fast becoming a reality. It can clearly be an effective way to provide a comparable experience, and it is both more affordable and more accessible to most student athletes. Ultimately, an effective online coaching experience comes from 1) knowing your athlete, 2) knowing what to test and observe, and 3) knowing how to use those observations to influence training. Lastly, having the athlete see and feel the results of their training builds trust and further strengthens the relationship between coach and athlete. When you can demonstrate that you’re 100% invested in an athlete, they’ll continue to invest in you.

Author Bio: Ian is a graduate of the University of Regina, and is currently a high performance strength & conditioning coach with Esports Health & Performance. Additionally, he is serving as an Advisory Team Member for the CSCA.

References

Hartman, M. (2011). Competitive performance compared to combine performance as a valid predictor of NFL draft status. The Journal of Strength & Conditioning Research, 25, S105-S106.

Markovic, G., Dizdar, D., Jukic, I., & Cardinale, M. (2004). Reliability and factorial validity of squat and countermovement jump tests. The Journal of Strength & Conditioning Research, 18(3), 551-555.

McGee, K. J., & Burkett, L. N. (2003). The National Football League combine: A reliable predictor of draft status? The Journal of Strength & Conditioning Research, 17(1), 6-11.

Sierer, S. P., Battaglini, C. L., Mihalik, J. P., Shields, E. W., & Tomasini, N. T. (2008). The National Football League Combine: performance differences between drafted and nondrafted players entering the 2004 and 2005 drafts. The Journal of Strength & Conditioning Research, 22(1), 6-12.